Explainer: Reasoning Models - how AI is learning to 'think'

Generative AI is evolving from pattern recognition to real-time reasoning.

Historically, large language models (LLMs) have functioned as predictive tools, generating responses based on pre-trained knowledge. While highly capable, these models have lacked true reasoning abilities, limiting their usefulness for complex business decision-making (that is, for actually working through a problem rather than recalling an answer).

A new generation of reasoning models, including OpenAI’s o3 and DeepSeek’s R1, is addressing this limitation. By increasing the amount of compute used at inference, these models go beyond simple prediction to step-by-step problem solving.

For enterprises, this represents a significant shift in how AI can be applied to decision-making, process automation, and complex problem-solving.

A shift in compute: from training to inference

Traditional LLMs, such as GPT-4, rely on pre-trained knowledge. Their knowledge is fixed, and most computation happens during training. This approach limits their ability to solve new problems dynamically; they generate responses based on statistical patterns rather than reasoning through a problem in real time.

Reasoning models operate differently. Instead of front-loading computational effort during training, they scale compute at inference time - meaning more processing power is allocated when processing a prompt.

This shift enables models to analyze, plan, and refine their responses during execution, rather than simply recalling pre-learned patterns.

A useful analogy is the difference between:

• A traditional LLM, which behaves like a calculator, retrieving pre-set formulas to answer questions.

• A reasoning model, which behaves more like a mathematician, actively working through a problem step by step.

This difference has important implications for enterprise, particularly in areas requiring logical reasoning, strategic decision-making, and autonomous task execution.

How these models ‘think’ in steps

The core innovation behind reasoning models is their ability to structure and refine their own thinking process.

1. Decomposing problems – Instead of generating an immediate response, these models break down tasks into subproblems and solve them sequentially.

2. Scaling compute at inference time – More computational power is used when the prompt is submitted. There are two primary ways to do this: adding wording like ‘think step-by-step’ to the prompt, or adopting a ‘vote and search’ strategy, where the LLM generates multiple answers and the best one is then chosen by vote.

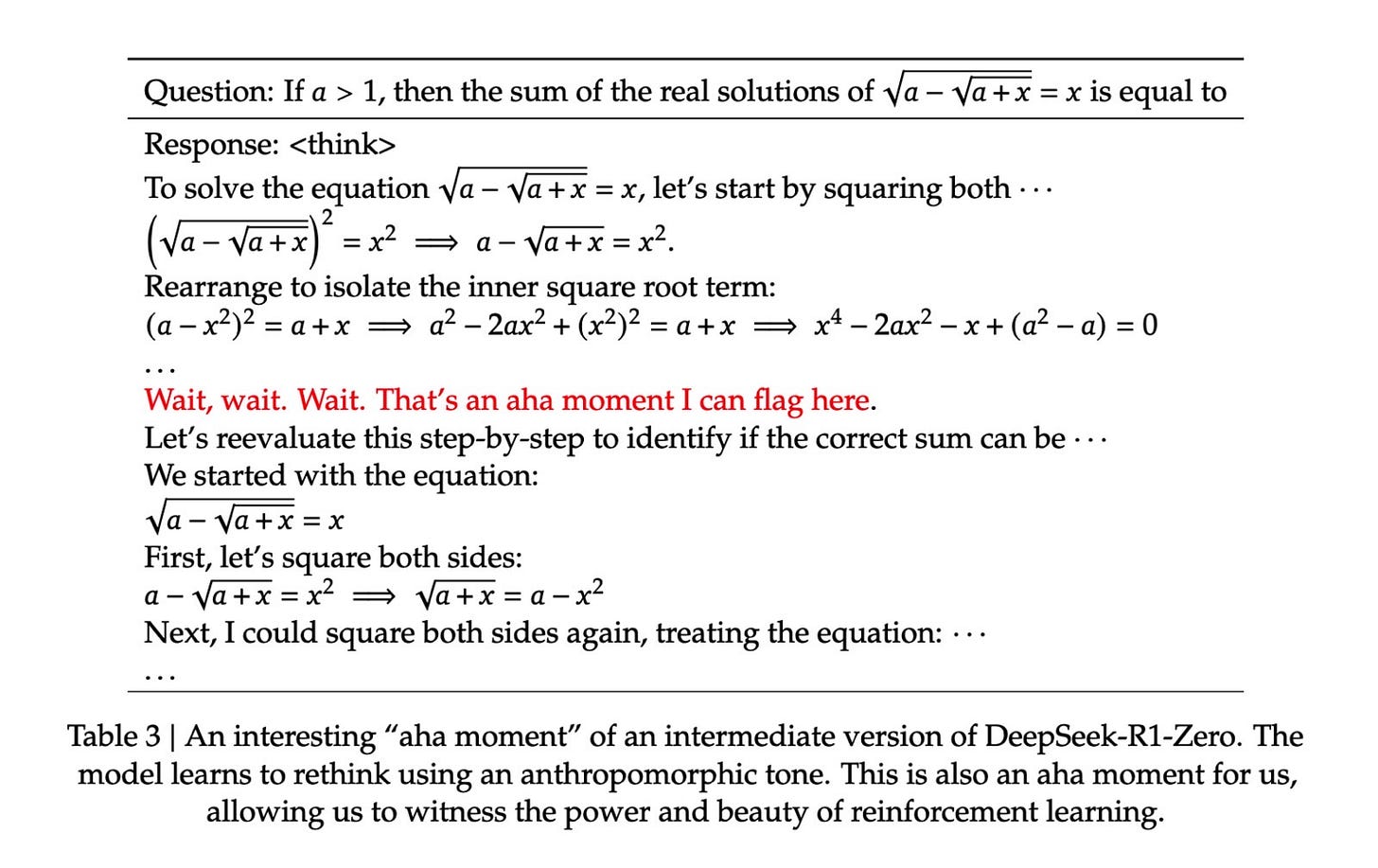

3. Re-evaluation of reasoning – DeepSeek’s R1 is able to allocate more thinking time to a problem by re-evaluating its approach. DeepSeek describes this as an “aha moment”. s1 (the ‘$50 model’ announced yesterday) achieves a similar effect by telling the model to ‘wait’.

4. Executing tasks during reasoning – A significant innovation from OpenAI’s Deep Research agent allows the model to take action mid-reasoning as part of the Chain of Thought (CoT), meaning it can fetch additional data or interact with external systems while solving a problem. The implications for AI agents (and the problems they can solve) are vast.
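
The ‘vote’ strategy from step 2 can be sketched in a few lines of Python. This is a minimal illustration, not how any particular vendor implements it: `Sampler`, `with_step_by_step`, `majority_vote`, and `toy_sampler_factory` are hypothetical names, and the ‘model’ here is a fake that returns noisy answers so the example runs without an API.

```python
import random
from collections import Counter
from typing import Callable

# Hypothetical stand-in for a real LLM call: takes a prompt, returns an answer string.
Sampler = Callable[[str], str]


def with_step_by_step(question: str) -> str:
    """Prompt-side scaling: ask the model to reason before answering."""
    return f"{question}\nLet's think step by step."


def majority_vote(sample: Sampler, question: str, n_samples: int = 5) -> str:
    """Sample several answers to the same prompt and return the most common one."""
    prompt = with_step_by_step(question)
    answers = [sample(prompt).strip() for _ in range(n_samples)]
    # Each extra sample is one more model call, i.e. more inference-time compute.
    return Counter(answers).most_common(1)[0][0]


def toy_sampler_factory(seed: int = 0) -> Sampler:
    """A fake, noisy 'model' (assumption for illustration only)."""
    rng = random.Random(seed)

    def sample(prompt: str) -> str:
        # Returns the right answer ("42") more often than any single wrong one.
        return rng.choice(["42", "42", "42", "41", "43"])

    return sample


if __name__ == "__main__":
    print(majority_vote(toy_sampler_factory(), "What is 6 * 7?", n_samples=15))
```

The design point is that accuracy is bought with repeated calls: `n_samples=15` means roughly fifteen times the inference work of a single answer.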

By working through problems in a structured manner, these models move beyond simple text generation and toward multi-step, self-correcting reasoning.
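
The ‘wait’ idea from step 3 can also be sketched. The version below is an assumption about the general shape of the technique rather than s1’s actual code: `Continue`, `reason_with_wait`, and `toy_model` are hypothetical names, and the fake model is scripted to get the sum wrong first and correct itself once nudged.

```python
from typing import Callable

# Hypothetical stand-in for a model call: given the text so far,
# return the next chunk of reasoning.
Continue = Callable[[str], str]


def reason_with_wait(continue_reasoning: Continue, question: str,
                     extra_rounds: int = 2) -> str:
    """Extend a reasoning trace by appending 'Wait,' each time the model stops."""
    trace = continue_reasoning(question)
    for _ in range(extra_rounds):
        # Rather than accepting the first stopping point, nudge the model to re-check.
        trace += "\nWait,"
        trace += continue_reasoning(question + "\n" + trace)
    return trace


def toy_model(text_so_far: str) -> str:
    """Fake model (illustration only): wrong at first, corrected after a nudge."""
    if "Wait," in text_so_far:
        return " let me re-check: 17 + 25 = 42."
    return "17 + 25 = 32."


if __name__ == "__main__":
    print(reason_with_wait(toy_model, "What is 17 + 25?", extra_rounds=1))
```

Each extra round is another pass through the model, so `extra_rounds` acts as a simple dial for how much thinking time a prompt receives.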

Reinforcement learning to improve reasoning

DeepSeek shaped the news agenda with their eye-catching model training figures for the R1 model. But perhaps more interesting was the other model they announced - R1-Zero.

Until now, LLMs have typically combined reinforcement learning (in other words, using a reward function to train AI through trial and error based on desired outcomes) with human feedback (i.e., humans guiding the model where a desired outcome may not be obvious).

“R1-Zero, however, drops the HF [human feedback] part - it’s just reinforcement learning. DeepSeek gave the model a set of math, code, and logic questions, and set two reward functions: one for the right answer, and one for the right format that utilized a thinking process. Moreover, the technique was a simple one: instead of trying to evaluate step-by-step (process supervision), or doing a search of all possible answers (a la AlphaGo), DeepSeek encouraged the model to try several different answers at a time and then graded them according to the two reward functions.” - Ben Thompson, Stratechery

This led to the ‘aha moments’ referenced above. Note that DeepSeek did need to reintroduce human feedback for the R1 model to refine R1-Zero’s output, and to improve its performance.
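
To make the two reward functions concrete, here is a rough sketch of grading a group of sampled answers. It is not DeepSeek’s code. The `<think>`/`<answer>` tag layout, the exact-match answer check, the equal weighting of the two rewards, and the `relative_scores` normalization step are all assumptions made for illustration, as are the function names.

```python
import re
from statistics import mean, pstdev
from typing import List

# Assumed output format: reasoning in <think>...</think>, then <answer>...</answer>.
FORMAT = re.compile(r"<think>.+?</think>\s*<answer>.+?</answer>", re.DOTALL)
ANSWER = re.compile(r"<answer>(.+?)</answer>", re.DOTALL)


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the assumed thinking format, else 0.0."""
    return 1.0 if FORMAT.fullmatch(completion.strip()) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the tagged final answer exactly matches the reference, else 0.0."""
    found = ANSWER.search(completion)
    return 1.0 if found and found.group(1).strip() == reference.strip() else 0.0


def grade_group(completions: List[str], reference: str) -> List[float]:
    """Grade several sampled answers to one question with both rewards."""
    return [accuracy_reward(c, reference) + format_reward(c) for c in completions]


def relative_scores(rewards: List[float]) -> List[float]:
    """Assumed step: score each answer relative to the rest of its group."""
    mu, sigma = mean(rewards), pstdev(rewards)
    if sigma == 0:
        return [0.0] * len(rewards)  # all answers equally good: no signal
    return [(r - mu) / sigma for r in rewards]


if __name__ == "__main__":
    samples = [
        "<think>6 * 7 = 42</think><answer>42</answer>",
        "<think>6 * 7 = 41</think><answer>41</answer>",
        "The answer is 42",
    ]
    rewards = grade_group(samples, "42")
    print(rewards, relative_scores(rewards))
```

Note that no human grades the reasoning itself: only the final answer and the format are checked, which is what makes this kind of training cheap to run at scale on math, code, and logic questions.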

The drawbacks of reasoning models

So why wouldn’t we just use a reasoning model for all LLM tasks?

Simply put, the cost is too high. Scaling inference-time compute makes each prompt more expensive and slower to answer. For fast and simple queries (like summarization), reasoning models are therefore overkill. They can also be more prone to errors as a result of ‘overthinking’. Sebastian Raschka, PhD, summarizes it best: “use the right tool (or type of LLM) for the task”.
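
A back-of-the-envelope calculation shows why. The sketch below assumes per-token pricing and assumes that hidden reasoning tokens are billed like output tokens; the prices and token counts are made-up placeholders, not any vendor’s actual rates, and `Pricing` and `prompt_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """Hypothetical prices in dollars per million tokens."""
    input_per_million: float
    output_per_million: float


def prompt_cost(pricing: Pricing, input_tokens: int, visible_output_tokens: int,
                reasoning_tokens: int = 0) -> float:
    """Cost of one prompt, assuming reasoning tokens are billed as output tokens."""
    output_tokens = visible_output_tokens + reasoning_tokens
    total = (input_tokens * pricing.input_per_million
             + output_tokens * pricing.output_per_million)
    return total / 1_000_000


if __name__ == "__main__":
    pricing = Pricing(input_per_million=1.0, output_per_million=4.0)  # placeholders
    plain = prompt_cost(pricing, input_tokens=1_000, visible_output_tokens=200)
    reasoning = prompt_cost(pricing, input_tokens=1_000, visible_output_tokens=200,
                            reasoning_tokens=5_000)
    print(f"plain: ${plain:.4f}  reasoning: ${reasoning:.4f}  "
          f"ratio: {reasoning / plain:.1f}x")
```

With these placeholder numbers the same question costs about twelve times more once 5,000 reasoning tokens are added, even though the visible answer is identical in length.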

Key takeaways for enterprise

The introduction of reasoning models expands AI’s ability to solve problems that were previously beyond its capabilities.

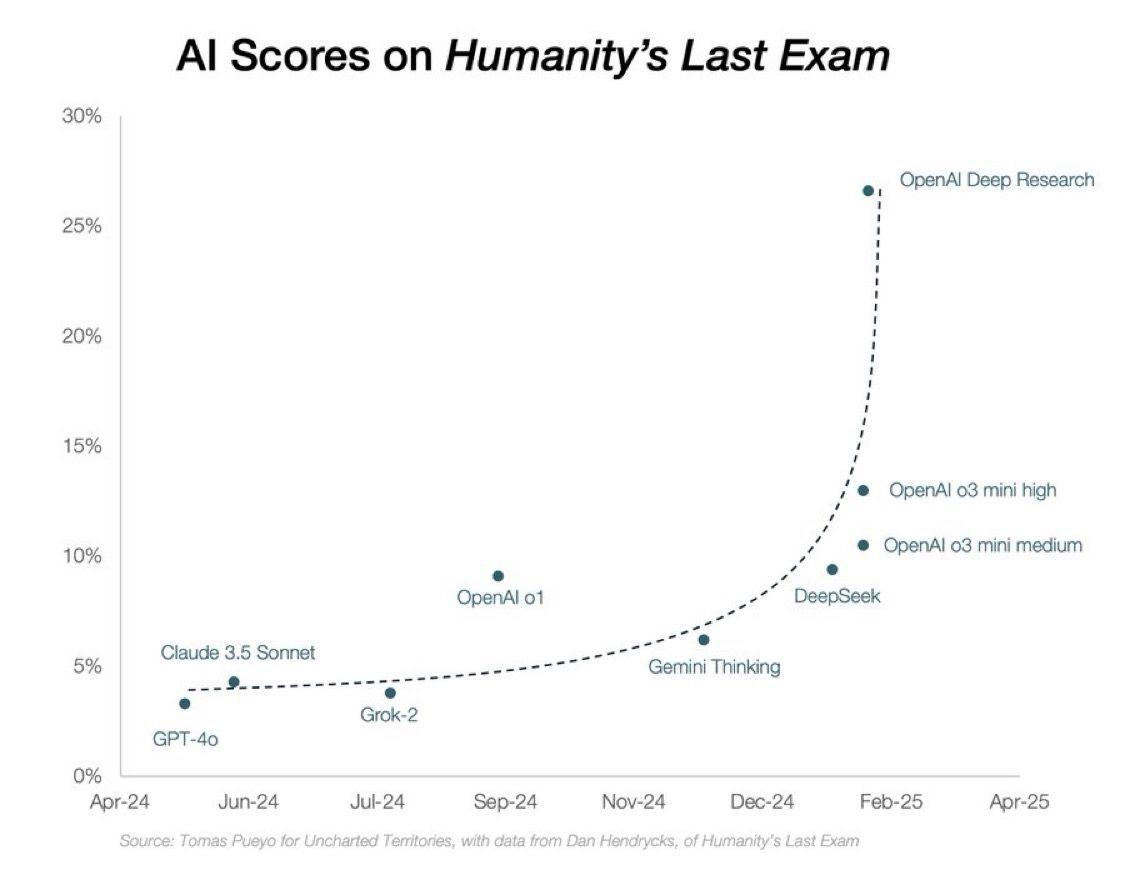

The chart above aims to show the exponential acceleration in AI scores for Humanity’s Last Exam, a benchmark created by Scale AI and the Center for AI Safety (CAIS) to evaluate AI models against human capabilities. It consists of over 3,000 extremely difficult questions across multiple disciplines.

This version of the chart isn’t entirely accurate in my opinion (Deep Research is an agent whereas the others are models), but it does go to show the vastly accelerated capabilities that reasoning models like o3 will be able to deliver when packaged into an agent.

This shift moves AI beyond information retrieval and text generation - it is now capable of structured problem-solving and dynamic decision-making.

These models will enable:

• Logical decision-making – AI can now analyze, break down, and reason through strategic business challenges, financial modelling, and operational planning.

• Improved reliability – By reasoning before responding, these models can reduce the likelihood of errors and increase the accuracy of AI-driven decisions on complex tasks (with the ‘overthinking’ caveat above for simple ones).

• AI agents with higher autonomy – With the ability to reason, plan, and execute tasks, AI agents can take on more complex responsibilities without human intervention.

The business applications are vast. You can imagine AI agents enabled by reasoning models automatically reacting to market fluctuations; anticipating disruptions in operations and resolving bottlenecks in real-time; and reasoning through complex customer support issues.

For enterprises evaluating AI capabilities, reasoning models represent a significant step forward in making AI a more reliable and capable tool for high-value business applications.
